Take SaaS as an example. It’s not uncommon for an enterprise to have upwards of 50 SaaS applications and cloud service providers under its data umbrella. Each has its own native security controls and features, which, considered individually and configured correctly, function just fine. But once you have many of them, each with varying oversight from security teams, you run into a problem of scale. On top of that, these systems aren’t built to communicate with each other and don’t share policies or controls, which forces an org to maintain multiple classification taxonomies, labeling policies, security controls, and more.

Here’s a look at some of the top challenges that security teams face in protecting data in the cloud — and beyond.

Finding dark data and shadow data

Think about this: it’s possible, even likely, that the majority of your enterprise data is dark or shadow data, stored in forgotten systems or accumulating in new ones that have yet to come under security teams’ oversight (we’re looking at you, gen AI models). It could be legacy data that conceals unknown risk, leaves sensitive data vulnerable to breach, and jeopardizes regulatory compliance, or it could hold untapped business value waiting to be unlocked. Either way, you need full visibility into it to minimize risk to your org.

One-third of enterprises say that 75% or more of their organization’s data is dark data: data collected over time that no one monitors, that isn’t used as a business asset, and that could pose an embarrassing risk to the enterprise if compromised. Depending on the industry, anywhere from 40% to 90% of an organization’s data might fall into this category.

When it comes to shadow data — data that is being created, stored, and even shared without security controls or policies in place — 60% of orgs estimate that over half of their data is unknown to their security and IT teams. If it’s not monitored, it’s more vulnerable to unauthorized access.

Both dark and shadow data expose enterprises to risk, leave them vulnerable to attack, and can put them out of compliance with multiple regulations.
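To make the two definitions above more concrete, here is a minimal Python sketch that labels entries in a data inventory as dark, shadow, or both. It assumes an inventory already exists and records a last-access date, whether a business owner is on record, and whether the store is covered by security policies. The `DataStore` and `classify` names and the one-year staleness threshold are hypothetical choices for illustration, not how any particular product defines these categories.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DataStore:
    name: str
    last_accessed: date          # most recent read or write observed
    has_owner: bool              # a business owner is on record
    under_security_policy: bool  # covered by security controls and policies


def classify(store: DataStore, today: date, stale_after_days: int = 365) -> set[str]:
    """Return the labels ("dark", "shadow") that apply to one data store."""
    labels: set[str] = set()
    stale = today - store.last_accessed > timedelta(days=stale_after_days)
    # Dark (hypothetical rule): untouched for longer than the threshold and owned by no one.
    if stale and not store.has_owner:
        labels.add("dark")
    # Shadow (hypothetical rule): exists without security controls or policies in place.
    if not store.under_security_policy:
        labels.add("shadow")
    return labels


today = date(2024, 6, 1)
inventory = [
    DataStore("legacy-crm-backup", date(2021, 3, 1), has_owner=False, under_security_policy=True),
    DataStore("genai-prompt-logs", date(2024, 5, 30), has_owner=True, under_security_policy=False),
    DataStore("billing-db", date(2024, 5, 31), has_owner=True, under_security_policy=True),
]
for store in inventory:
    print(store.name, sorted(classify(store, today)))
# legacy-crm-backup ['dark']
# genai-prompt-logs ['shadow']
# billing-db []
```

In practice, the hard part is building the inventory in the first place; rules like these are only as good as the access and ownership metadata that feeds them.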

Discovering blind spots

Just because you know where your data is doesn’t mean that you have the visibility into it that you need. Data that falls into a company’s blind spot may contain sensitive information that poses a risk to the organization, from personal information (PI) and protected health information (PHI) to employee data or company IP.

You can’t protect what you can’t see, or what you don’t even know you have. You have to find it before you can safeguard it. Old data discovery techniques, like manual asset classification, don’t hold up in complex cloud environments. Enterprises need modern, machine-learning-based techniques to address blind spots and gain visibility into all their sensitive data, everywhere.

Prioritizing misconfigurations

It’s no secret that businesses want to move fast to accelerate innovation and maintain a competitive edge, and that teams are often rushed to the point of making mistakes. As a result, configuration errors in cloud services are common. And while most are avoidable, the settings involved are not always easy to get right, which exacerbates the problem. From rushed development and deployment to out-of-the-box automation to improper encryption, the causes of these errors are as diverse as they are pernicious, and they leave sensitive data in misconfigured systems exposed and vulnerable to unauthorized access.

Security teams — who are themselves just as rushed — are left to catch these mistakes, operationalize a process to prioritize them, and fix them. Businesses need visibility into where their most important data lives so they can manage the configurations of those environments more intelligently.
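One way to act on that visibility is to rank misconfiguration findings by the sensitivity of the data they expose, not by severity alone. The minimal Python sketch below multiplies a severity weight by a data-sensitivity weight. The `Finding` fields, the four-level scales, and the multiplicative score are all assumptions made for illustration, not a standard scoring scheme.

```python
from dataclasses import dataclass

# Hypothetical four-level scales; higher means more severe or more sensitive.
SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}
SENSITIVITY = {"public": 1, "internal": 2, "confidential": 3, "restricted": 4}


@dataclass
class Finding:
    asset: str
    issue: str
    severity: str          # key into SEVERITY
    data_sensitivity: str  # highest classification found in the asset, key into SENSITIVITY


def risk_score(finding: Finding) -> int:
    """Weight the misconfiguration's severity by the sensitivity of the exposed data."""
    return SEVERITY[finding.severity] * SENSITIVITY[finding.data_sensitivity]


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Highest risk first; ties broken by asset name so the order is deterministic."""
    return sorted(findings, key=lambda f: (-risk_score(f), f.asset))


findings = [
    Finding("marketing-site-bucket", "public read access", "critical", "public"),
    Finding("patient-records-db", "encryption at rest disabled", "high", "restricted"),
    Finding("hr-share", "link sharing enabled", "medium", "confidential"),
]
for f in prioritize(findings):
    print(risk_score(f), f.asset, "-", f.issue)
# 12 patient-records-db - encryption at rest disabled
# 6 hr-share - link sharing enabled
# 4 marketing-site-bucket - public read access
```

With this scoring, a critical-severity issue on a bucket of public marketing assets ranks below a high-severity issue on a database of restricted patient records, which is the point of tying configuration management to where the most important data lives.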

Managing technical controls

Orgs create policies to manage their data all the time, defining how teams should handle practices like password masking and data encryption. Whether those policies reflect best practices, and whether they are then properly communicated, implemented, and operationalized across the business, is a different story.

Even with the best policies and practices in place, many security teams still have no way of knowing whether other departments and divisions are following sound data protection practices. To determine whether these (one hopes) carefully constructed, well-intentioned policies are being followed beyond their own department, security teams need visibility into all the data within the org.
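As an illustration of what that visibility enables, the minimal Python sketch below checks each data store against a hypothetical policy (encryption at rest required, every sensitive column masked) and reports adherence per department. The `DataStoreConfig` fields and the policy rules are assumptions; in a real deployment they would be populated from discovery and classification results rather than written by hand.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DataStoreConfig:
    name: str
    department: str
    encrypted_at_rest: bool
    sensitive_columns: set[str] = field(default_factory=set)
    masked_columns: set[str] = field(default_factory=set)


def policy_violations(store: DataStoreConfig) -> list[str]:
    """Check one store against a hypothetical policy: encrypt at rest, mask every sensitive column."""
    violations = []
    if not store.encrypted_at_rest:
        violations.append("not encrypted at rest")
    unmasked = store.sensitive_columns - store.masked_columns
    if unmasked:
        violations.append("unmasked sensitive columns: " + ", ".join(sorted(unmasked)))
    return violations


def adherence_by_department(stores: list[DataStoreConfig]) -> dict[str, float]:
    """Fraction of each department's data stores with no policy violations."""
    totals = Counter(s.department for s in stores)
    compliant = Counter(s.department for s in stores if not policy_violations(s))
    return {dept: compliant[dept] / total for dept, total in totals.items()}


stores = [
    DataStoreConfig("payroll", "finance", True, {"ssn", "salary"}, {"ssn", "salary"}),
    DataStoreConfig("ledger-export", "finance", False),
    DataStoreConfig("crm", "sales", True, {"email", "phone"}, {"email"}),
]
print(policy_violations(stores[2]))     # ['unmasked sensitive columns: phone']
print(adherence_by_department(stores))  # {'finance': 0.5, 'sales': 0.0}
```

A per-department view like this is what lets a security team see adherence outside its own purview instead of assuming it.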

Ensuring least privilege

Enterprises must govern access to their data estate according to the principle of least privilege. A system that surfaces anomalies in data permissions and provides ways to remediate them is a critical control for keeping your data safe.

The need for convenient access to data for business purposes must be balanced against the need to keep that data secure. Identifying over-privileged users and configurations that allow public or discretionary sharing, while still meeting users’ legitimate data needs quickly, is a challenge every enterprise faces. A sophisticated system that understands and contextualizes your entire data estate lets you maintain this balance, keeping your users productive while preventing unnecessary access that could be exploited if a user’s account is compromised.
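A common way to surface over-privilege is to compare the permissions each principal has been granted with the permissions it has actually used over an observation window. The minimal Python sketch below does that with a set difference and also flags public shares. The `Grant` type, the convention of using `"anyone"` as the principal for public or link-based sharing, and the assumption that usage data comes from access logs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Grant:
    principal: str   # user or group; "anyone" stands for public or link-based sharing
    resource: str
    permission: str  # e.g. "read", "write", "admin"


def unused_grants(granted: set[Grant], used: set[Grant]) -> set[Grant]:
    """Grants never exercised during the observation window: candidates for revocation."""
    return granted - used


def public_grants(granted: set[Grant]) -> set[Grant]:
    """Grants that expose a resource to anyone, regardless of identity."""
    return {g for g in granted if g.principal == "anyone"}


granted = {
    Grant("alice", "sales-db", "read"),
    Grant("alice", "sales-db", "admin"),
    Grant("bob", "hr-share", "write"),
    Grant("anyone", "pricing-deck", "read"),
}
# Assumed to be derived from access logs over the observation window.
used = {Grant("alice", "sales-db", "read"), Grant("bob", "hr-share", "write")}

for g in sorted(unused_grants(granted, used)):
    print("unused:", g.principal, g.permission, g.resource)
for g in sorted(public_grants(granted)):
    print("public:", g.resource)
# unused: alice admin sales-db
# unused: anyone read pricing-deck
# public: pricing-deck
```

Unused grants are candidates for revocation rather than automatic removals: some rarely exercised permissions, such as break-glass admin access, may still be legitimate and should go through review.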

Managing breach response

A dreaded data breach, or even a malicious attack, can happen to the most conscientious of companies. The measure of your org’s security practices lies less in whether data is compromised than in how you respond: the speed, transparency, and comprehensiveness with which the incident is managed. Efficient response management is key to compliance, while a poor response can cost an organization dearly in regulatory penalties, legal settlements and fees, brand reputation, and more.

And while being secure doesn’t make an enterprise immune to breaches, enterprises that prioritize security risks and remediate the highest-level threats and violations first will be better positioned to detect and deflect threats when they arise, and to handle breaches efficiently, smoothly, and in compliance with regulations.

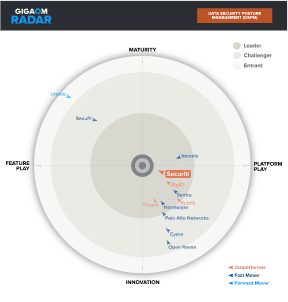

Defaulting to legacy methods of securing data, and relying on different sets of policies for disparate systems across the enterprise, just doesn’t cut it in a world where 329 million terabytes of data are created each day. Using Securiti’s unified Data Command Center, orgs can enable data security posture management (DSPM) for their enterprise cloud data, then go beyond DSPM to protect not just cloud data but also on-prem data, SaaS data, and data in motion. Standing up a single, centralized source of data truth allows you to discover dark data at scale across all systems in your enterprise, bring shadow data under your security team’s oversight, pull blind spots into view, better prioritize and manage risk from misconfigurations, manage user access through a least-privilege lens, and conduct a comprehensive, compliant data breach response if something goes wrong.