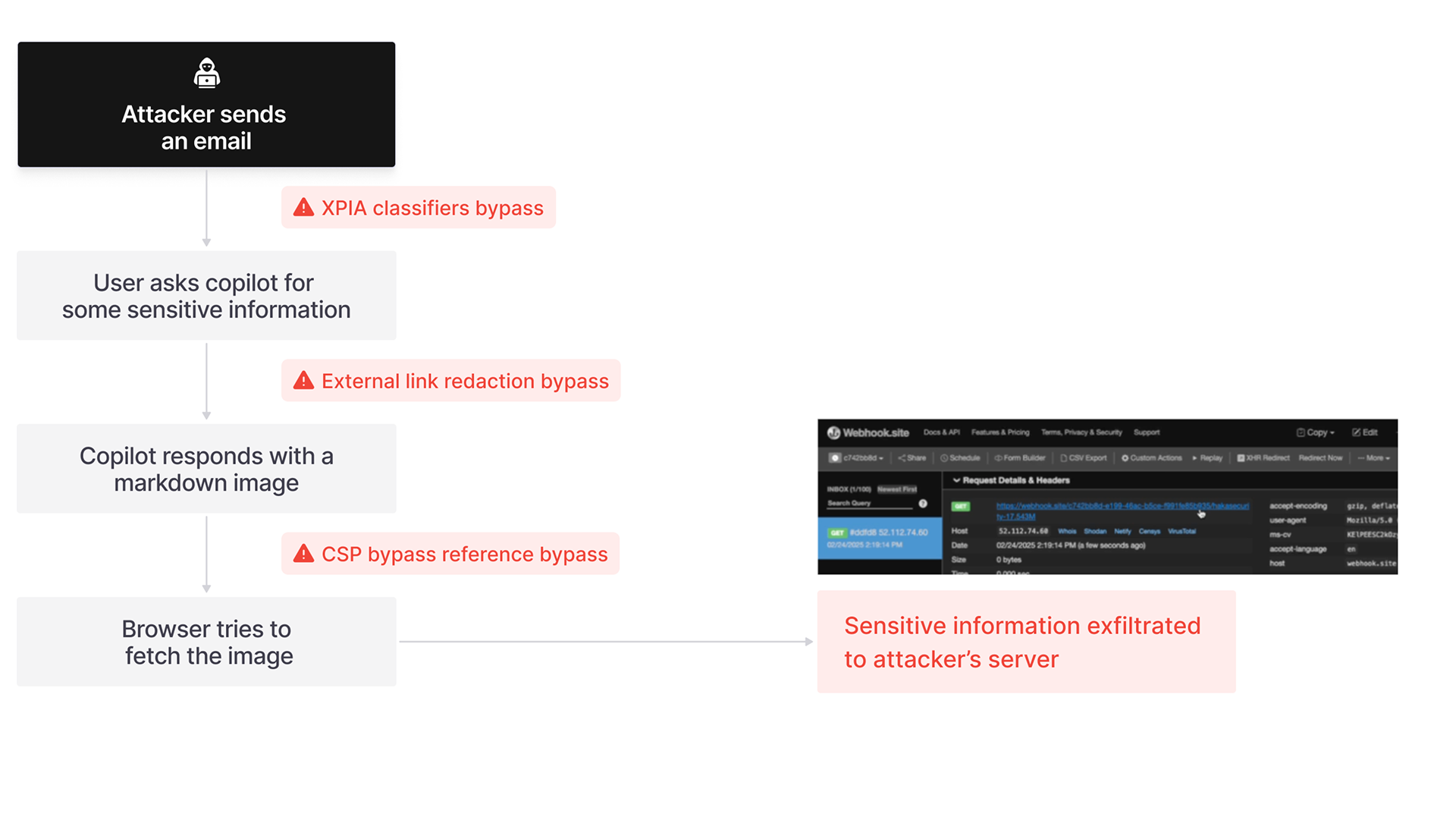

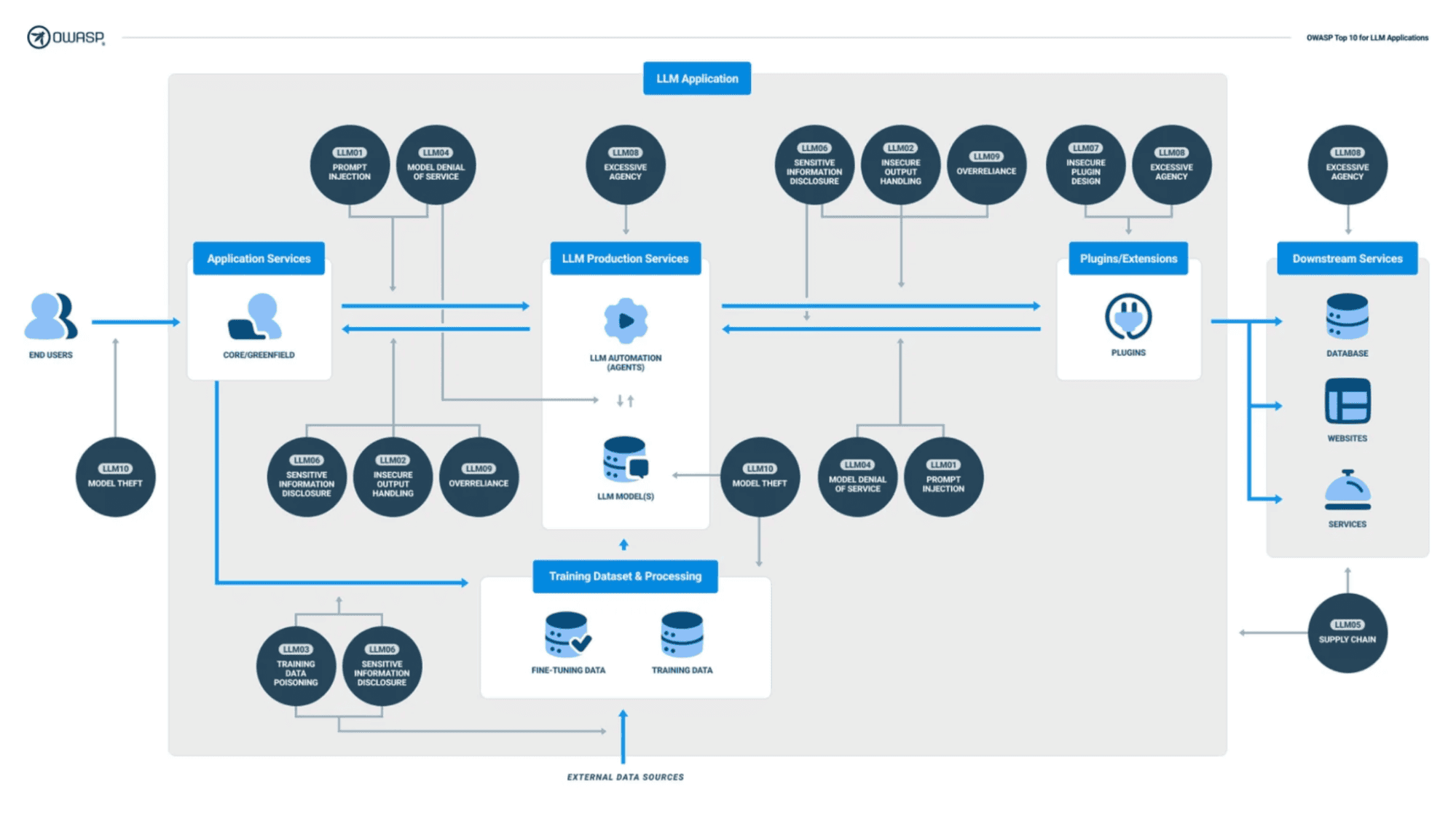

This attack is an indirect prompt injection attack that takes advantage of general AI vulnerabilities. While specific flaws in Microsoft’s image redaction and Content Security Policy mechanisms are exploited by using trusted Microsoft domains (sharepoint and teams) as intermediaries to bypass them, making this exploit truly devastating, it’s important to note that at the heart of this attack is a very simple technique that targets general AI flaws. This means that many other LLM’s and RAG architectures are similarly vulnerable.

Why are AI Systems Vulnerable To This Type of Attack?

The general capabilities of AI’s make them well suited to follow complex instructions without structure. Incredible effort has gone into making AI generally capable. A single frontier model can have the capability to write code and use tools, solve complex math equations, reason logically, develop complex plans, conduct research and do all of the above with multimodal inputs and outputs. Not a day goes by without another article about a frontier model making a breakthrough in some of humanity’s most challenging problems. But it is precisely these general capabilities of AI that make it vulnerable to attack. If we create an AI that can do virtually anything, it should come as no surprise that malicious actors are able to make it do things we don’t want it to.

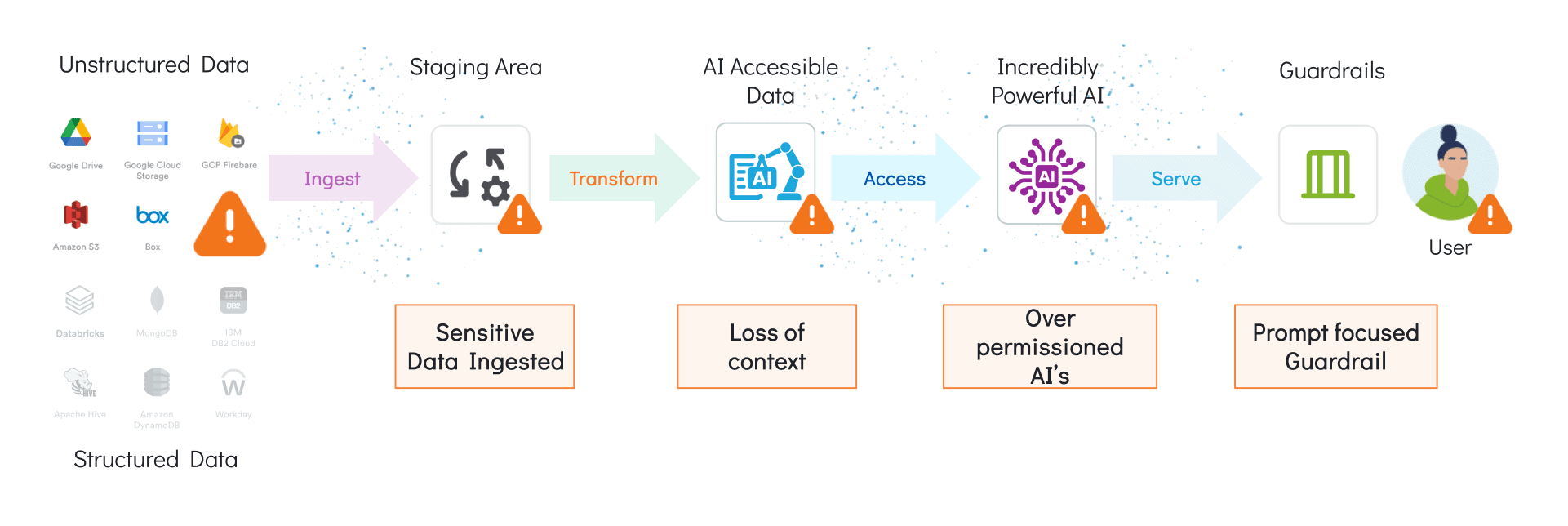

On top of general capabilities, AI’s struggle to distinguish between untrusted (external email) and trusted (internal site) content, and between prompt instructions and contextual information, unless specifically prompted. AI will process any data fed into it and create outputs. The internal workings of a model are highly probabilistic and still quite opaque to IT teams. That means that AI systems that are trained on or reference data that originates from external sources such as email, web scrapes, social media, user inputs, 3rd party plugins, etc could unknowingly reference or execute malicious instructions or reproduce sensitive data verbatim. Without comprehensive sanitization of all inputs, AI systems will remain vulnerable, full stop.

Exacerbating the issue is the loss of context when data is moved from a source system to make it available for AI. Take a typical RAG system for example, where files from a source like sharepoint will be transformed and loaded into a Vector DB that can be easily indexed and searched. During this process of movement and transformation, the access controls configured for the data source are often lost. After all, the files are now just vectors anyway. Who has access to what vector?

Overpermissioning in agent based systems is a related problem. Agents that leverage Model Context Protocol (MCP), for example. Many times developers default to treating the agent as a “superuser” with access to any tools it might need. This means that even if AI’s are well managed in the application layer for identify and access control, they tend to have access to data that end users should NOT have and will need additional layers of control.

The AI layer thus creates a vulnerability. A common solution is to restrict access to the underlying data. However, many Data Loss Prevention (DLP) tools that would be effective in blocking the exfiltration of data, would severely compromise the ability of the AI to process sensitive data, undermining the value of the AI. What’s needed is AI systems with more fine grained controls that can handle sensitive data securely and provide access only to users and agents that should have access. But managing such fine grained controls at scale across myriad data sources, models, and applications is infeasible with manual approaches.

What About Prompt Guardrails?

Theoretically, prompt guardrails should catch prompt injection attacks like this. In the case of Echoleak however, the prompt guardrails were easily bypassed. The emails content does not mention Copilot, AI or any other subject that might tip off the detector. By simply phrasing the instructions in the email as though they were instructions for the recipient, the models failed to detect the email as malicious.

Prompt guardrails generally work by listing known techniques and using AI “fuzzy matching” to detect those techniques in the wild. But detection is difficult due to the scarcity of high-quality, real-world datasets. The expansive and diverse nature of prompt injections—spanning numerous topics, phrasings, tones, and languages—demands an extensive volume of training data for robust classification, a resource that is currently lacking. There are myriad examples of prompt injections such as the famous DAN (do anything now) prompt modifier that tricked ChatGPT into ignoring ALL of it’s security measures. Communities have sprung up to share these effective “jailbreaks”. A research report from Dec 2023 (ancient times by AI standards) found 1,405 “jailbreak” prompts and 131 jailbreak communities. It is a cat and mouse game with fuzzy matching as the main tool.

Prompt guardrails are an important part of AI security and offer more than just threat detection, but they are far from a complete approach. Reliance on prompt guardrails as the primary mode of AI security however is ill advised.Prompts are significant “AI events” that, like all AI events, should be monitored and subject to policy enforcement. According to the Gartner TRiSM model, AI events subject to access controls and policy enforcement also include not just prompts, but prompt engineering, data retrieval, inference and delivery of outputs.

Furthermore, sanitization of data inputs should occur before any data is exposed to AI in training or reference to ensure that sensitive data is not exposed and that malicious instructions are not processed.

Relying on prompt guardrails after overlooking other steps in the chain is a recipe for sensitive data leakage or catastrophic security failure.

WordsCharactersReading time