DSPM Must Address the Unique Requirements of Data & AI Laws

Data protection laws and regulations are designed to ensure data integrity and the fair use and processing of data and, more importantly, to uphold users’ right to privacy. However, as AI becomes a cornerstone of almost every new technology, the need for AI-specific regulatory guidance continues to grow. In response, governments around the world have proposed or enacted AI laws and guidance, such as the EU AI Act, US Executive Order 14110, and the FTC’s AI guidelines.

Take, for instance, Article 10 (Data and Data Governance) of the EU AI Act. It requires that the datasets used to train, validate, and test high-risk AI systems follow sound data governance and management practices. These practices must include relevant data preparation steps, such as labeling, cleaning, sanitization, or aggregation. Covered entities must also adopt effective measures to detect, prevent, and mitigate bias. Other AI laws impose similar, and sometimes additional, requirements on AI training data, model access entitlements, and more.

The shift to AI, and the laws that accompany it, require DSPM solutions to cover all of these requirements and offer robust, automated compliance capabilities, such as autonomous compliance reporting and Data + AI risk assessments.
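To make "automated compliance" more concrete, here is a minimal sketch of a per-dataset check that produces a simple report. It assumes a hypothetical `Dataset` record carrying preparation steps, a bias-assessment flag, and per-group counts; the required steps and the 10% representation threshold are illustrative assumptions loosely inspired by Article 10's themes, not an official checklist or any vendor's implementation.

```python
from dataclasses import dataclass, field

# Hypothetical requirements for illustration only; not an official Article 10 checklist.
REQUIRED_PREP_STEPS = {"labeling", "cleaning"}
MIN_GROUP_SHARE = 0.1  # assumed threshold for flagging under-represented groups


@dataclass
class Dataset:
    name: str
    purpose: str  # "training", "validation", or "testing"
    prep_steps: set = field(default_factory=set)
    bias_assessed: bool = False
    group_counts: dict = field(default_factory=dict)


def underrepresented_groups(counts, min_share=MIN_GROUP_SHARE):
    """Return groups whose share of records falls below min_share."""
    total = sum(counts.values())
    if total == 0:
        return []
    return sorted(g for g, n in counts.items() if n / total < min_share)


def assess(ds):
    """Collect human-readable governance issues for one dataset."""
    issues = []
    missing = REQUIRED_PREP_STEPS - ds.prep_steps
    if missing:
        issues.append(f"missing preparation steps: {', '.join(sorted(missing))}")
    if not ds.bias_assessed:
        issues.append("no bias assessment recorded")
    low = underrepresented_groups(ds.group_counts)
    if low:
        issues.append(f"under-represented groups: {', '.join(low)}")
    return issues


def compliance_report(datasets):
    """Map each dataset name to its list of issues (empty list means no findings)."""
    return {ds.name: assess(ds) for ds in datasets}


datasets = [
    Dataset("loan-train", "training", {"labeling", "cleaning"}, True, {"A": 850, "B": 150}),
    Dataset("loan-test", "testing", {"labeling"}, False, {"A": 95, "B": 5}),
]
for name, issues in compliance_report(datasets).items():
    print(name, "OK" if not issues else issues)
```

A real DSPM tool would pull this metadata from data catalogs and scans rather than hand-written records; the point here is only that each requirement becomes a repeatable, reportable check.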

DSPM Should Detect & Mitigate Toxic Combinations

Google defines toxic combinations as “a group of security issues that, when they occur together in a particular pattern, create a path to one or more of your high-value resources that a determined attacker could potentially use to reach and compromise those resources.”

Consider a cloud environment with several security issues, such as a misconfigured bucket, publicly exposed storage, and unintended access to AI training data. Viewed individually, each risk may seem manageable. Combined, however, they can give an attacker a path to sensitive data, with potentially catastrophic consequences.
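The idea that individually minor findings become critical only in combination can be sketched as a rule match over findings grouped by resource. The finding types and rule names below are hypothetical examples, not a real product's taxonomy.

```python
# Hypothetical finding types and combination rules, for illustration only.
TOXIC_RULES = {
    "public AI training data": {"publicly_exposed", "contains_training_data"},
    "exposed sensitive bucket": {"misconfigured_bucket", "publicly_exposed", "sensitive_data"},
}


def find_toxic_combinations(findings):
    """findings: iterable of (resource_id, finding_type) pairs.

    Returns (resource_id, rule_name) for every rule whose required
    findings are all present on the same resource.
    """
    by_resource = {}
    for resource, kind in findings:
        by_resource.setdefault(resource, set()).add(kind)
    hits = []
    for resource, kinds in sorted(by_resource.items()):
        for rule, required in TOXIC_RULES.items():
            if required <= kinds:  # subset check: every required finding is present
                hits.append((resource, rule))
    return hits


findings = [
    ("bucket-a", "misconfigured_bucket"),
    ("bucket-a", "publicly_exposed"),
    ("bucket-a", "contains_training_data"),
    ("bucket-b", "misconfigured_bucket"),
    ("bucket-b", "sensitive_data"),
]
print(find_toxic_combinations(findings))
```

Here `bucket-b` has two issues but no matching combination, while `bucket-a` matches the "public AI training data" rule; that distinction is what lets a team fix the dangerous pattern first.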

AI has sharply increased the need for solutions that mitigate toxic combinations. The technology adds another layer of complexity to already complex IT infrastructure, leaving enterprises with overlapping configuration issues that create toxic combinations across environments. To put this into perspective, 82% of security leaders are concerned that AI will amplify toxic combinations.

Traditional solutions like SIEM generate too many alerts, making it difficult for analysts to filter out the noise and prioritize critical issues. Moreover, alerts are often ignored because they lack data context. Identifying toxic combinations helps teams determine which risks are most critical and prioritize remediation accordingly.
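One way to picture "data context" in prioritization is a scoring heuristic that weights each alert by the sensitivity of the data involved and amplifies alerts that share a resource with other open issues. The sensitivity labels, weights, and multiplicative formula below are assumptions chosen for illustration, not a standard scoring model.

```python
from dataclasses import dataclass

# Assumed sensitivity weights; real classifications and weights vary by organization.
SENSITIVITY_WEIGHT = {"public": 1, "internal": 2, "confidential": 3, "restricted": 4}


@dataclass
class Alert:
    alert_id: str
    resource: str
    severity: int  # 1 (low) .. 5 (critical)
    data_class: str  # sensitivity label of the data on the resource


def prioritize(alerts):
    """Sort alerts by a heuristic score: severity x data sensitivity x co-located alerts."""
    # Count open alerts per resource; co-located issues hint at a toxic combination.
    per_resource = {}
    for a in alerts:
        per_resource[a.resource] = per_resource.get(a.resource, 0) + 1

    def score(a):
        base = a.severity * SENSITIVITY_WEIGHT[a.data_class]
        return base * per_resource[a.resource]

    return sorted(alerts, key=score, reverse=True)


alerts = [
    Alert("A1", "vm-1", 5, "public"),          # 5 * 1 * 1 = 5
    Alert("A2", "bucket-a", 2, "restricted"),  # 2 * 4 * 2 = 16
    Alert("A3", "bucket-a", 3, "restricted"),  # 3 * 4 * 2 = 24
    Alert("A4", "db-1", 4, "internal"),        # 4 * 2 * 1 = 8
]
print([a.alert_id for a in prioritize(alerts)])
```

The "critical" alert on public data (A1) drops to the bottom, while two moderate alerts on the same restricted bucket rise to the top, which mirrors the argument that context, not raw severity, should drive remediation order.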

To detect and remediate toxic combinations, DSPM solutions need to take a graph-based approach to security. The graph should map the relationships among sensitive data, systems, applications, and AI models, highlighting toxic combinations so they do not go unnoticed.
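A graph-based view can be sketched as a directed graph where an edge means "access to this node grants access to that one," with a breadth-first search from an exposed entry point to sensitive assets. The node names and edges below are a hypothetical environment; BFS is simply one reasonable way to surface the shortest attack path, not necessarily how any particular DSPM product works.

```python
from collections import deque

# Hypothetical asset graph: an edge u -> v means "access to u grants access to v".
EDGES = {
    "internet": ["public-bucket", "web-app"],
    "public-bucket": ["ci-credentials"],
    "web-app": [],
    "ci-credentials": ["ml-pipeline-role"],
    "ml-pipeline-role": ["training-data", "fraud-model"],
    "training-data": [],
    "fraud-model": [],
}
SENSITIVE = {"training-data", "fraud-model"}


def attack_paths(graph, source, targets):
    """Return the shortest path from source to each reachable target (BFS)."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    paths = {}
    for t in sorted(targets):
        if t in parent:
            # Walk parent pointers back to the source, then reverse.
            path, cur = [], t
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            paths[t] = path[::-1]
    return paths


for target, path in attack_paths(EDGES, "internet", SENSITIVE).items():
    print(target, ":", " -> ".join(path))
```

Each path strings together issues (a public bucket, leaked CI credentials, an over-privileged ML role) that might look minor on their own but together expose AI training data and models.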

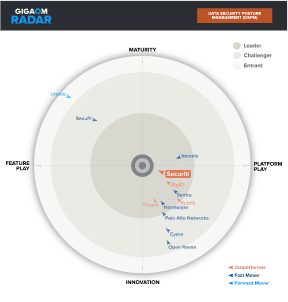

Interested in learning how Securiti’s DSPM solution can help you evolve your data security strategy? Check out our on-demand webinar, GigaOm DSPM Radar Highlights: Your Guide to Data+AI Security.